Protect your digital space with DeepBrain AI’s online Deepfake Detector, designed to accurately identify AI-generated content within minutes.

Easily recognize advanced deepfake videos that are challenging to detect with the naked eye.

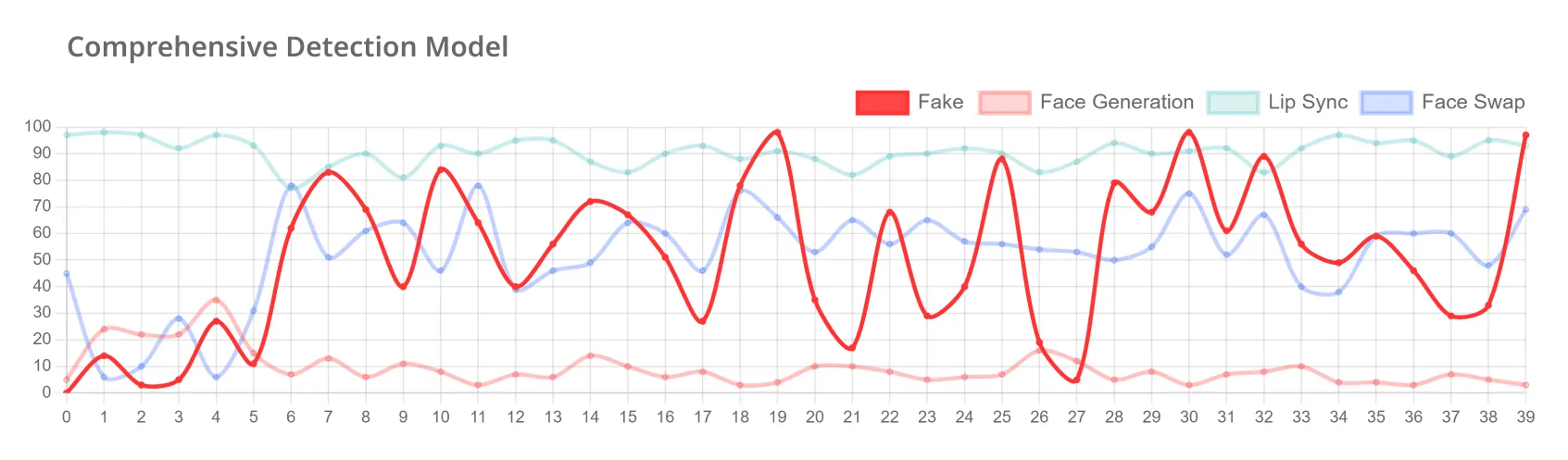

Powered by advanced deep learning algorithms, DeepBrain AI's deepfake identification tool examines multiple elements of video content to distinguish and detect different types of synthetic media manipulation.

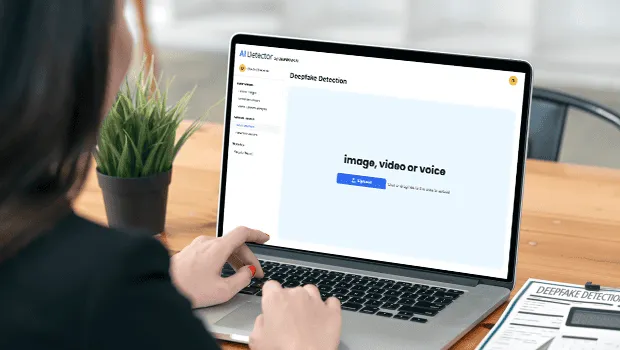

Upload your video, and our AI will quickly analyze it, providing an accurate assessment within five minutes to determine if it has been created using deepfake or AI technology.

We accurately detect various deepfake forms—such as face swaps, lip sync manipulations, and AI-generated videos—ensuring you engage with authentic and trustworthy content.

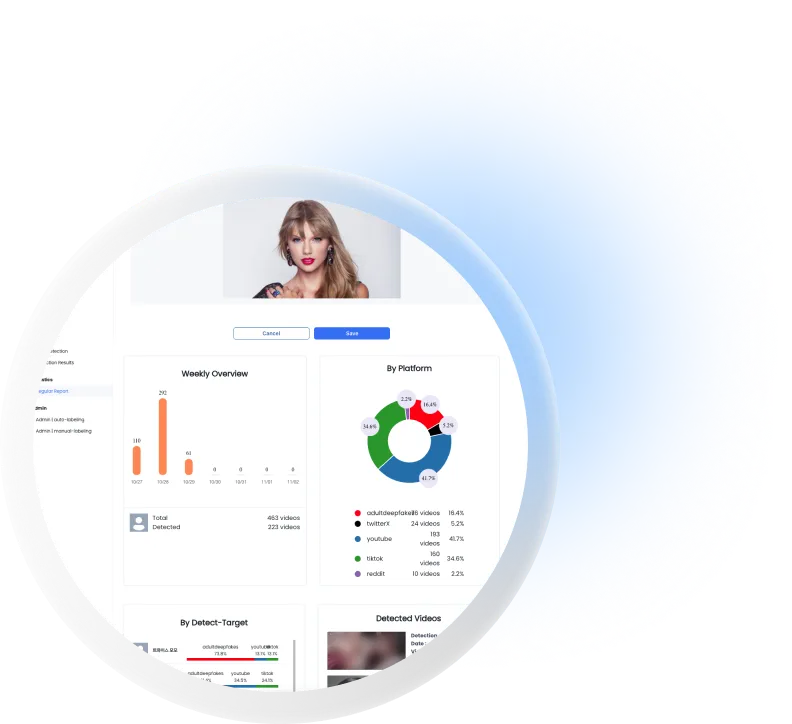

Quickly and accurately detect manipulated videos and media to protect against a wide range of deepfake crimes. DeepBrain AI’s detection solution helps prevent fraud, identity theft, personal exploitation, and misinformation campaigns.

We continuously advance our technology to combat deepfakes, protecting vulnerable groups and providing actionable insights against digital exploitation. We're committed to empowering organizations to safeguard digital integrity effectively.

We provide our solutions to, and partner with, law enforcement agencies, including South Korea's National Police Agency, to improve our deepfake detection software and enable quicker responses to related crimes.

DeepBrain AI was selected by South Korea's Ministry of Science and ICT to lead the "Deepfake Manipulation Video AI Data" project in collaboration with Seoul National University's AI Research Lab (DASIL).

We provide a one-month free demo to businesses, government agencies, and educational institutions to combat AI-generated video crimes and enhance their response capabilities.

Check out our FAQ for quick answers on our deepfake detection solution.

A deepfake is synthetic media created using artificial intelligence and machine learning techniques. It typically involves manipulating or generating visual and audio content to make it appear that a person said or did something they never actually said or did. Deepfakes can range from face swaps in videos to entirely AI-generated images or voices that mimic real people with a high degree of realism.

DeepBrain AI's deepfake detection solution is designed to identify and filter out AI-generated fake content. It can spot various types of deepfakes, including face swaps, lip syncs, and AI/computer-generated videos. The system works by comparing suspicious content with original data to verify authenticity. This technology helps prevent potential harm from deepfakes and supports criminal investigations. By quickly flagging artificial content, DeepBrain AI's solution aims to protect individuals and organizations from deepfake-related threats.

Each deepfake detection system uses different techniques to spot manipulated content. DeepBrain AI’s deepfake detection process leverages a multi-step method to verify authenticity:

This multi-step approach allows DeepBrain AI to thoroughly analyze videos, images, and audio to determine if they are genuine or artificially created.

The accuracy of DeepBrain AI’s deepfake detection technology varies as the technology develops, but it generally detects deepfakes with over 90% accuracy. As the company continues to advance its technology, this accuracy keeps improving.
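To make the "over 90%" figure concrete, here is a minimal sketch of the conventional definition of accuracy: the share of all evaluated videos that were labeled correctly. How DeepBrain AI actually measures accuracy is not stated here, so the function name, its parameters, and the counts below are illustrative assumptions, not published results.

```python
def accuracy(true_fake: int, true_real: int, false_fake: int, false_real: int) -> float:
    """Fraction of all evaluated videos that were labeled correctly.

    true_fake  / true_real  : fakes and real videos labeled correctly
    false_fake / false_real : real videos labeled fake, and fakes labeled real
    """
    correct = true_fake + true_real
    total = correct + false_fake + false_real
    if total == 0:
        raise ValueError("no videos evaluated")
    return correct / total


# Made-up counts for illustration only: 1,000 videos, 930 labeled correctly.
print(accuracy(true_fake=460, true_real=470, false_fake=30, false_real=40))  # 0.93
```

Under this definition, 93% accuracy still leaves 70 of the 1,000 videos mislabeled, which is one reason a human review step remains useful.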

DeepBrain AI's current deepfake solution focuses on rapid detection rather than preemptive blocking. The system quickly analyzes videos, images, and audio, typically delivering results within 5–10 minutes. It categorizes content as "real" or "fake" and provides data on alteration rates and synthesis types.

Aimed at mitigating harm, the solution does not automatically remove or block content but notifies relevant parties like content moderators or individuals concerned about deepfake impersonation. The responsibility for action rests with these parties, not DeepBrain AI.
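The detect-and-notify flow described above can be sketched as follows. This is a hypothetical illustration, not DeepBrain AI's actual API: the `DetectionResult` record, its field names, and the `handle_result` helper are all assumptions made for the example. The point it shows is that a "fake" verdict produces notifications for reviewers, while the content itself is never removed or blocked.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DetectionResult:
    """Hypothetical result record; field names are illustrative only."""
    label: str                # "real" or "fake"
    alteration_rate: float    # assumed: share of the content judged altered, 0.0 to 1.0
    synthesis_types: List[str] = field(default_factory=list)  # e.g. ["face swap"]


def handle_result(result: DetectionResult, reviewers: List[str]) -> List[str]:
    """Build notification messages for reviewers; never removes or blocks content."""
    if result.label != "fake":
        return []  # nothing to report for content judged real
    kinds = ", ".join(result.synthesis_types) or "unspecified"
    return [
        f"To {name}: content flagged as fake "
        f"(alteration rate {result.alteration_rate:.0%}, synthesis: {kinds})"
        for name in reviewers
    ]


# Example: one flagged video, one reviewer to notify.
result = DetectionResult(label="fake", alteration_rate=0.42, synthesis_types=["face swap"])
for message in handle_result(result, ["moderation-team"]):
    print(message)
```

Keeping the decision to act outside the function mirrors the division of responsibility in the text: the detector reports, and moderators or affected individuals decide what to do next.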

DeepBrain AI is actively working with other organizations and companies to make preemptive blocking a possibility. For now, its detection solutions help review suspicious content and assist in investigating deepfake videos to reduce further harm.

Major tech companies are actively responding to the deepfake issue through collaborative initiatives aimed at mitigating the risks associated with deceptive AI content. In February 2024, they signed the "Tech Accord to Combat Deceptive Use of AI in 2024 Elections" at the Munich Security Conference. This agreement commits firms like Microsoft, Google, and Meta to develop technologies that detect and counter misleading content, particularly in the context of elections. They are also developing advanced digital watermarking techniques for authenticating AI-generated content and partnering with governments and academic institutions to promote ethical AI practices. Additionally, companies continuously update their detection algorithms and raise public awareness about deepfake risks through educational campaigns, demonstrating a strong commitment to addressing this emerging challenge.

While major tech companies are making strides to combat deepfakes, their efforts may not be enough. The vast amount of content on social media makes it nearly impossible to catch every instance of manipulated media, and more sophisticated deepfakes can evade detection for longer periods.

For individuals and organizations seeking additional protection, specialized solutions like DeepBrain AI offer a valuable layer of security. By continuously analyzing internet media and tracking specific individuals, DeepBrain AI helps mitigate the risks associated with deepfakes. In summary, while industry initiatives are important, a multi-faceted approach that includes specialized tools and public awareness is essential for effectively tackling the deepfake challenge.